This post is about the process from “first contact” (with a Streamie user) to getting a new feature out the door. I posted about this here while doing the work.

I received a phone call to the Streamie support number from a Director of IT in a public school district. Their video security system in this one school consisted of an amalgamation of ONVIF / RTSP, Ubiquiti and Rhombus cameras, some 60+ cameras in all. And they wanted all of them on each of several video walls. I still question the usefulness of these postage-stamp sized video streams, but to each his own!

Rhombus does not offer RTSP support, he explained, but they do have a LAN-based feature called Secure Raw Streams (SRS). He emailed me a link later on.

I got his Streamie account upgraded so that he could start testing Streamie with all of their non-Rhombus cameras on video walls (Apple TVs). Meanwhile, I agreed to take a look at SRS and assess the viability of adding support to Streamie.

I decided to email Rhombus and ask if they have some sort of online demo system that I can use for SRS streaming. I’ve never interacted with Rhombus before, so I wasn’t sure what this would yield. The IT guy mentioned on the phone yesterday that he has a sales engineer contact at Rhombus who could assist. Helpfully, he emailed him as well and got the ball rolling.

In the meantime, I needed some data to work with, so I turned to Big Buck Bunny, a short film that my kids have watched probably hundreds of times because I’ve used it for demo purposes with WebRTC work and now this. Oh great ChatGPT, tell me how to extract just the H.264 data from this container file, using ffmpeg on the command line, if you please:

So at least now I’ve got something to get started with. How this might vary from what SRS actually looks like, I dunno, but it can’t be TOO different.
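For reference, a typical way to pull the H.264 track out of an MP4 as a raw Annex B stream is ffmpeg's `h264_mp4toannexb` bitstream filter. The sketch below only assembles such a command. It is an assumption about a reasonable invocation, not necessarily the exact one used here, and the file names are placeholders.

```python
import subprocess

def annexb_extract_command(src: str, dst: str) -> list[str]:
    """Build an ffmpeg command that copies the H.264 video out of a
    container into a raw Annex B elementary stream, without re-encoding."""
    return [
        "ffmpeg",
        "-i", src,                     # input container, e.g. an MP4
        "-an",                         # drop the audio track
        "-c:v", "copy",                # keep the encoded video as-is
        "-bsf:v", "h264_mp4toannexb",  # length prefixes -> start codes
        "-f", "h264",                  # raw H.264 output format
        dst,
    ]

def extract(src: str, dst: str) -> None:
    """Run the command; requires ffmpeg on the PATH."""
    subprocess.run(annexb_extract_command(src, dst), check=True)
```

Calling `extract("bbb.mp4", "bbb.h264")` (hypothetical file names) would write the raw stream next to the source file.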

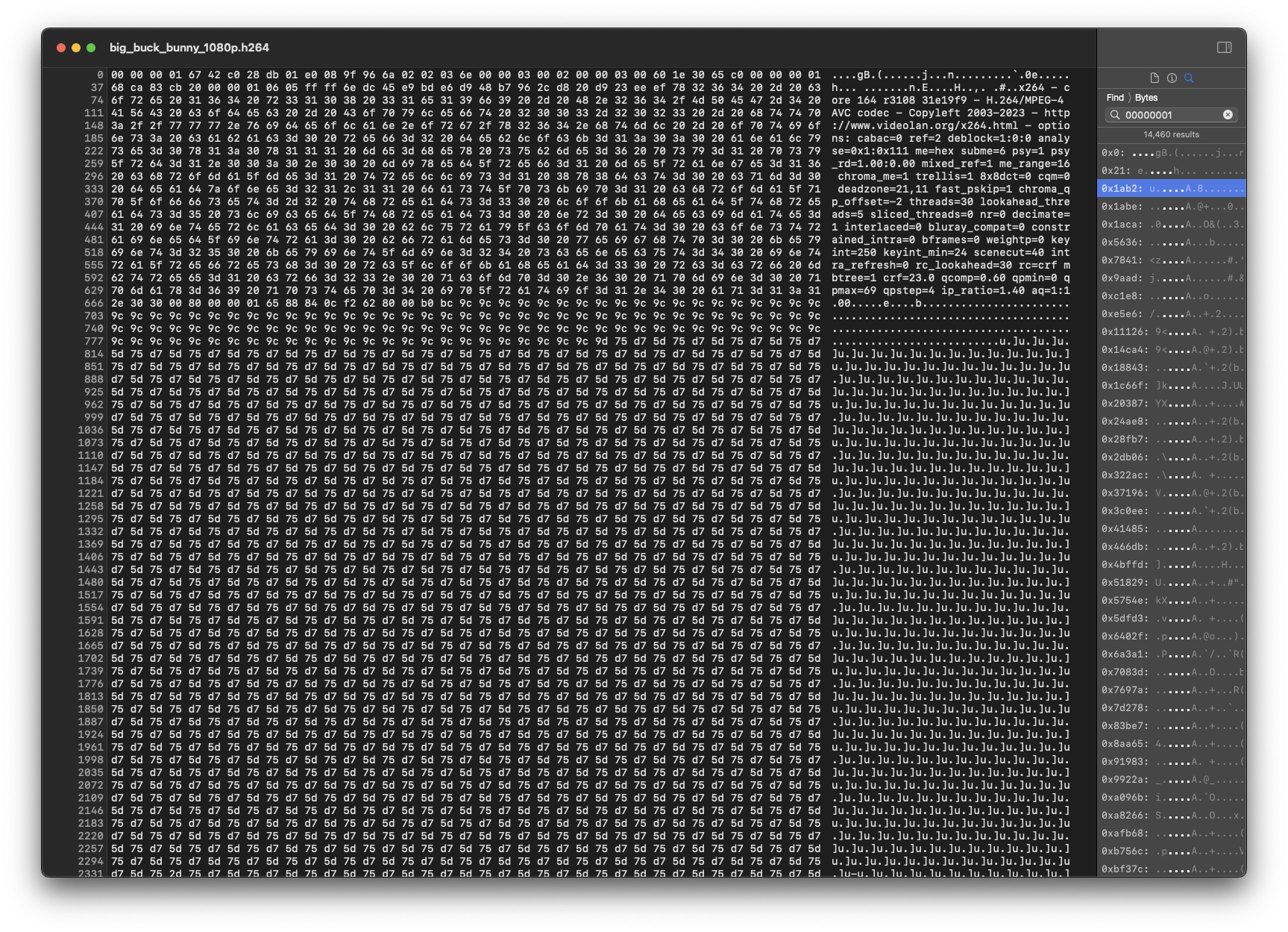

I placed the raw H264 version of Big Buck Bunny in an nginx folder so that I could access it via HTTP. All done with that. Time to code.

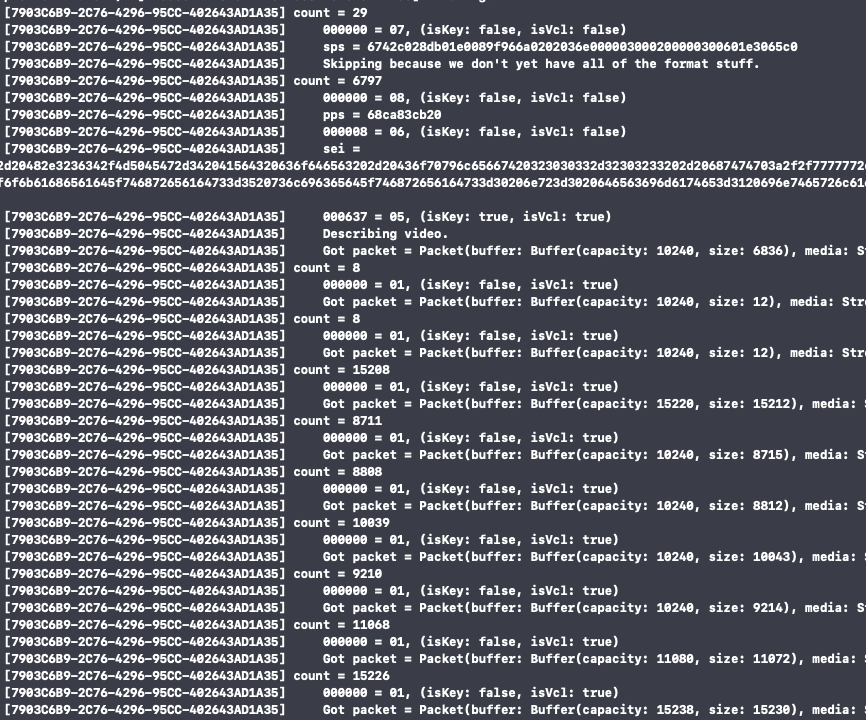

I put together the new RawStreamingSession class for this feature. It will just make a URLRequest and start streaming the received data. At a very high level, I should just need to accumulate the data, find start codes (0x00000001), parse NAL units, replace the NAL unit markers (0x000001) with 4-byte lengths and then pass it up the stack to the StreamingSession. Hopefully.
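The plan in that last paragraph can be sketched in a few lines. This is an illustration in Python rather than Streamie's actual Swift code, and it assumes the whole buffer is already in memory (a real streaming parser has to hold back the final, possibly incomplete NAL unit). The function names are made up for the example.

```python
import struct

START_CODE = b"\x00\x00\x01"  # the 4-byte form is this with one extra leading 0x00

def split_annexb(data: bytes) -> list[bytes]:
    """Split an Annex B buffer into NAL units with start codes removed."""
    nals = []
    i = data.find(START_CODE)
    while i != -1:
        start = i + len(START_CODE)
        nxt = data.find(START_CODE, start)
        end = len(data) if nxt == -1 else nxt
        # A NAL unit never ends in 0x00, so trailing zeros here are padding
        # or the first byte of a following 4-byte start code.
        nal = data[start:end].rstrip(b"\x00")
        if nal:
            nals.append(nal)
        i = nxt
    return nals

def to_length_prefixed(nals: list[bytes]) -> bytes:
    """Prefix each NAL unit with its 4-byte big-endian length."""
    return b"".join(struct.pack(">I", len(nal)) + nal for nal in nals)
```

Feeding `to_length_prefixed(split_annexb(buffer))` a buffer with mixed 3-byte and 4-byte start codes yields the same NAL units, each preceded by its length instead of a start code.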

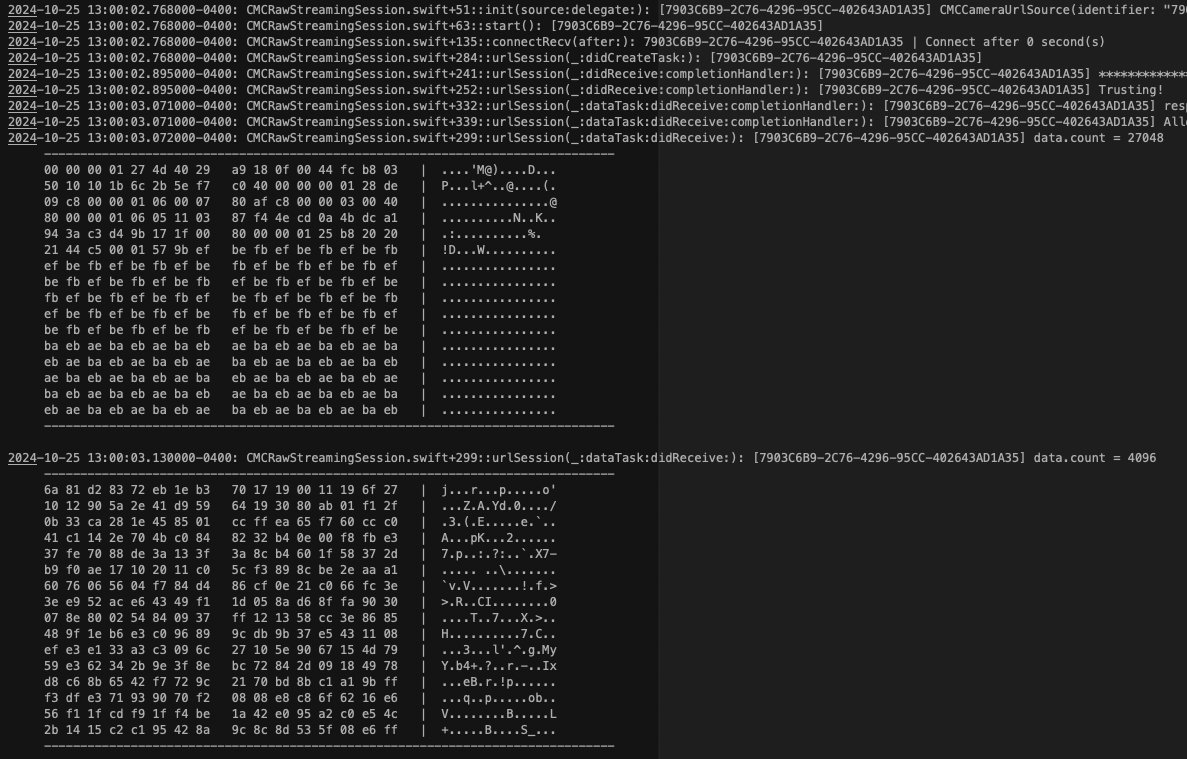

I got the data flowing, and there’s our start code. I’m not doing any parsing yet; I just need to see the data flowing first.

If you don’t use HextEdit, you should.

With the data flowing (and spamming my Xcode console), I started doing some basic buffering and parsing. I need to find the SPS and PPS NAL units first, so that I can initialize the video decoder. And I need to be able to identify key frames. The decoder gets moody if you just throw a random dependent frame at it to start with.
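To make that concrete: the NAL unit type lives in the low 5 bits of the first byte, with 7 = SPS, 8 = PPS, 5 = IDR (key frame) slice and 1 = non-IDR slice. A minimal sketch of the "wait for SPS, PPS and a key frame" gate, again in Python and with hypothetical names, might look like this:

```python
NAL_SLICE = 1      # non-IDR slice (depends on earlier frames)
NAL_SLICE_IDR = 5  # IDR slice (key frame)
NAL_SPS = 7        # sequence parameter set
NAL_PPS = 8        # picture parameter set

def nal_type(nal: bytes) -> int:
    """The NAL unit type is the low 5 bits of the first byte."""
    return nal[0] & 0x1F

def select_decodable(nals):
    """Return (sps, pps, slices), dropping every slice that arrives
    before the first key frame that follows both an SPS and a PPS."""
    sps = pps = None
    started = False
    slices = []
    for nal in nals:
        t = nal_type(nal)
        if t == NAL_SPS:
            sps = nal
        elif t == NAL_PPS:
            pps = nal
        elif t == NAL_SLICE_IDR and sps and pps:
            started = True
        if started and t in (NAL_SLICE, NAL_SLICE_IDR):
            slices.append(nal)
    return sps, pps, slices
```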

Finally though, I’m tweaking one last thing while the kids have invaded my office and my wife is trying to tell me something about dinner and then….

Woo!

I had run into some issues parsing the frames because the data was organized quite differently from anything I’d seen before. Then I realized I needed to redo the ffmpeg export to get the video into a constrained baseline format. With that done, everything magically started working.

And that’s good enough progress for today. Also, I realized everyone was invading my office because it is Friday evening, and we eat pizza and watch a movie over here on Friday evenings.

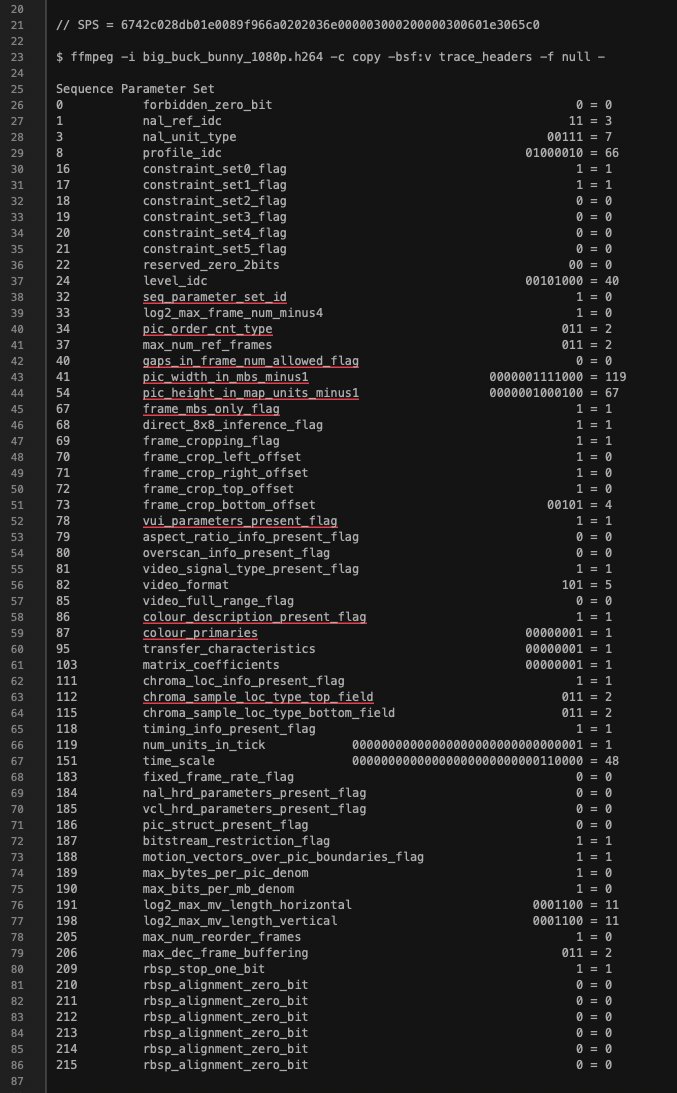

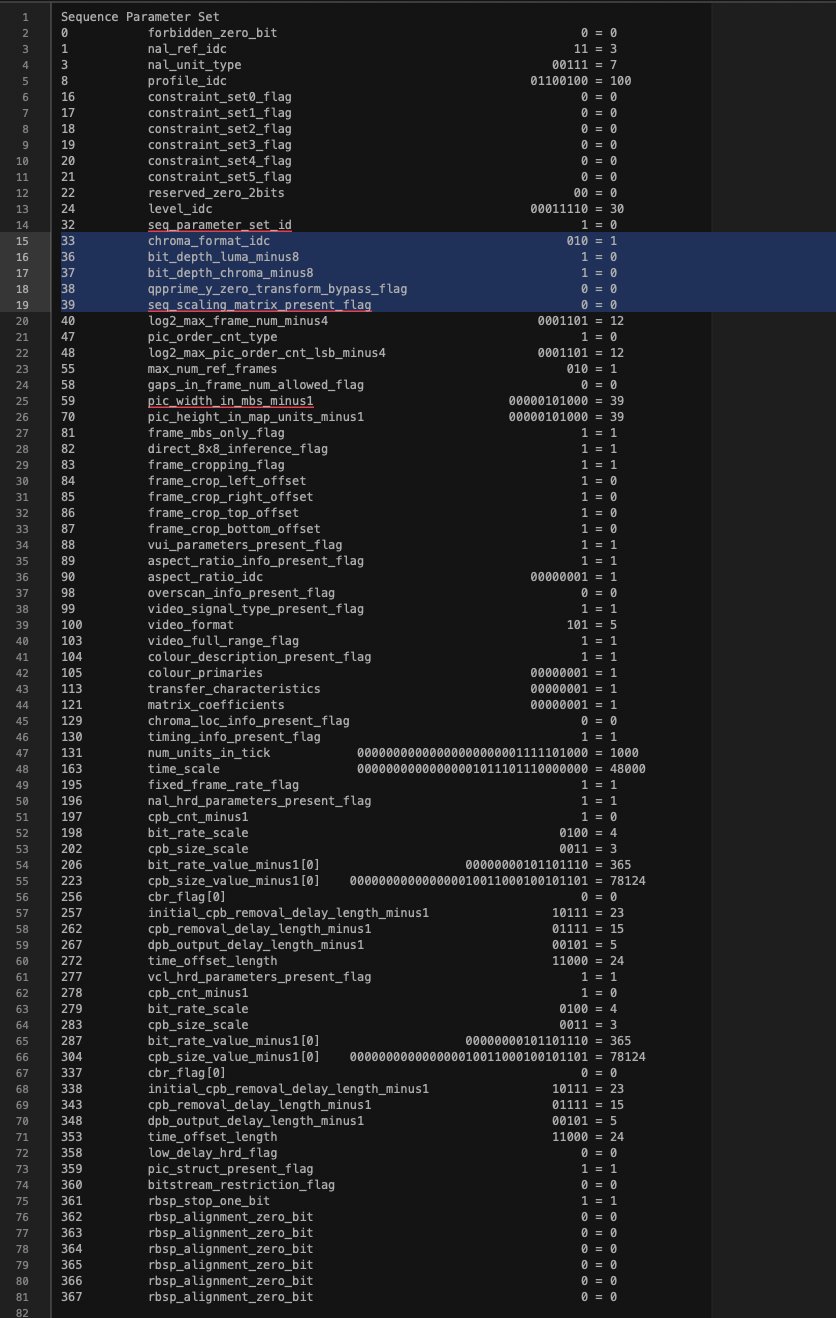

I cheated a bit getting video working yesterday: I hardcoded the video resolution and the frame rate. Today, I’m working on getting the frame timing correct. The only reason I was ever able to accomplish this is FFmpeg’s willingness to parse and dump field-by-field and bit-by-bit.

Of interest to us here is profile_idc, pic_width_in_mbs_minus1, pic_height_in_map_units_minus1, num_units_in_tick and time_scale. I should also grab the frame_crop* values, but I’m lazy. ChatGPT really did me a solid a while back when I was parsing ISO BMFF data to get MPEG-DASH support for YouTube live streaming working and I couldn’t find any good documentation online about the format. I didn’t have it write any code, but it could explain each data type and its format.
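For anyone following along, here is roughly how those fields turn into a resolution and a frame rate. This is a sketch under a few assumptions: cropping is ignored (so 1080p comes out as 1088 lines), and the frame rate formula is the usual one for fixed-frame-rate progressive video. The function names are illustrative.

```python
def sps_resolution(pic_width_in_mbs_minus1: int,
                   pic_height_in_map_units_minus1: int,
                   frame_mbs_only_flag: int = 1) -> tuple[int, int]:
    """Coded size in pixels; macroblocks are 16x16. Ignores frame_crop_*."""
    width = (pic_width_in_mbs_minus1 + 1) * 16
    height = (2 - frame_mbs_only_flag) * (pic_height_in_map_units_minus1 + 1) * 16
    return width, height

def sps_frame_rate(time_scale: int, num_units_in_tick: int) -> float:
    """A tick is one field period, so there are two ticks per frame."""
    return time_scale / (2 * num_units_in_tick)
```

For example, `sps_resolution(79, 44)` gives 1280x720, and a `time_scale` of 48 with `num_units_in_tick` of 1 gives 24 frames per second.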

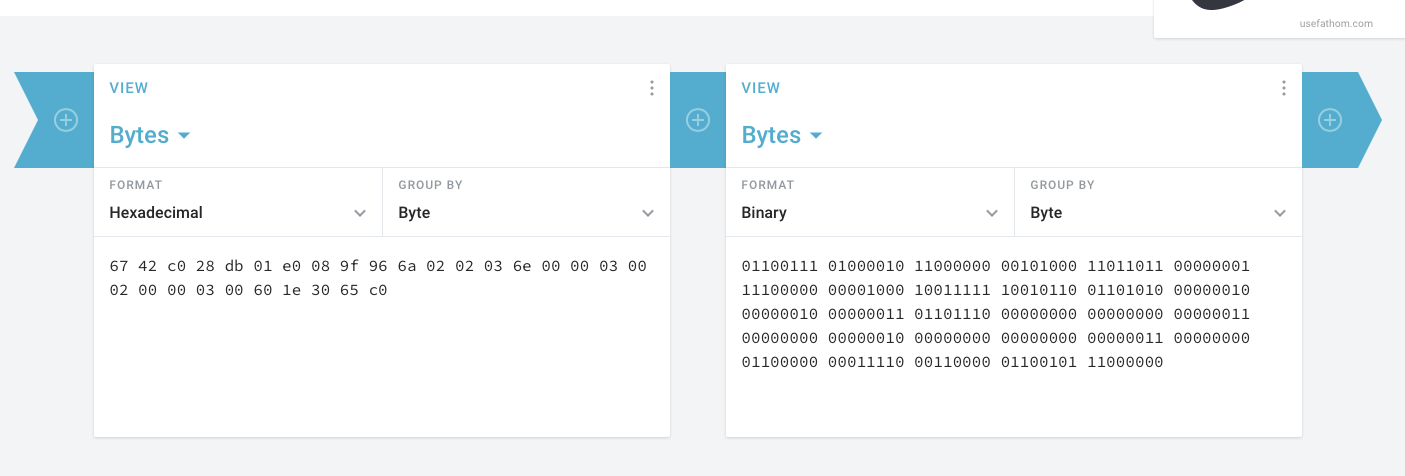

Also, check out Cryptii, which is really helpful as well. This is the SPS. On the left, in hex. On the right, in binary. The binary representation is very helpful because various values are one or perhaps several bits wide and they’re (of course) not byte-aligned. It’s better than having to think about hex in your head if you don’t have to.

Writing a parseSPS() function felt like a lot of really mundane programming so I let ChatGPT take a stab at it. I find ChatGPT to be useful for either things that a million people have done (it has a lot of training data) or things for which there are very precise specifications. Still, its unwillingness to be upfront about its uncertainty is always a huge time sink.

Anyway, it churned out a bunch of SPS parsing code that didn’t work. It was on the right track though. The first thing it needed was a mechanism for reading bits from a buffer. It actually did that correctly. The next thing it needed was an exponential-golomb decoder, which it got mostly correct. It didn’t actually work because it reversed the logic of one step, but discovering and fixing that error was maybe easier than writing the code myself? I dunno.
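For the curious, the two building blocks look something like this. It is a Python sketch of the general technique, not the code ChatGPT produced or what ships in Streamie. An unsigned Exp-Golomb value is coded as N zero bits, a 1 bit, then N more bits, and decodes to 2^N - 1 plus those N bits.

```python
class BitReader:
    """Reads bits MSB-first from a byte buffer."""

    def __init__(self, data: bytes):
        self.data = data
        self.pos = 0  # current position, in bits

    def read_bit(self) -> int:
        byte = self.data[self.pos >> 3]
        bit = (byte >> (7 - (self.pos & 7))) & 1
        self.pos += 1
        return bit

    def read_bits(self, n: int) -> int:
        value = 0
        for _ in range(n):
            value = (value << 1) | self.read_bit()
        return value

    def read_ue(self) -> int:
        """Unsigned Exp-Golomb, ue(v) in the H.264 spec."""
        zeros = 0
        while self.read_bit() == 0:
            zeros += 1
        return (1 << zeros) - 1 + self.read_bits(zeros)

    def read_se(self) -> int:
        """Signed Exp-Golomb, se(v): 1, 2, 3, 4 map to +1, -1, +2, -2."""
        k = self.read_ue()
        return (k + 1) // 2 if k & 1 else -(k // 2)
```

The mapping in `read_se()` is an easy step to get backwards: odd code numbers are positive, even ones negative.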

As I said though, its parseSPS() code didn’t work. Specifically, it came up with completely wacky numbers for the frame rate. Dropping into the debugger I could see that the 32 bits that it was parsing were the same as what I saw in the SPS itself, but different from the 32 bits that ffmpeg displayed.

And then it hit me.

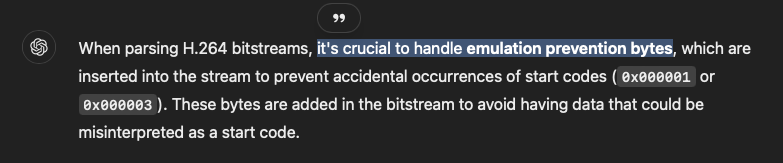

I went back to ChatGPT. “Umm, when parsing the SPS, how important would you say it is that we remove the emulation prevention bytes? And do you think we should maybe modify your solution to account for this?”

So “crucial” that you didn’t bother to do it in the first place? This is the problem.
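Some background on why this matters: inside a NAL unit, the encoder inserts a 0x03 byte after any two consecutive 0x00 bytes so that the payload can never contain something that looks like a start code. Those bytes have to be stripped before reading bit fields, otherwise everything after the first one is shifted by 8 bits. A minimal sketch of the removal step, in Python and with a made-up function name:

```python
def remove_emulation_prevention(nal: bytes) -> bytes:
    """Drop each 0x03 that follows two consecutive 0x00 bytes."""
    out = bytearray()
    zeros = 0  # consecutive 0x00 bytes seen so far
    for b in nal:
        if zeros >= 2 and b == 0x03:
            zeros = 0  # skip the emulation prevention byte itself
            continue
        out.append(b)
        zeros = zeros + 1 if b == 0x00 else 0
    return bytes(out)
```

The `num_units_in_tick` and `time_scale` fields are 32 bits each and tend to contain runs of zero bytes, which is where these extra 0x03 bytes usually show up in an SPS.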

Finally, I was able to remove the hardcoded parameters (resolution and frame rate). I improved the threading and buffering and made some performance improvements; cleaned up a few things.

Rhombus answered a few questions along the way, but it looks like there’s no such thing as a remotely accessible demo SRS system so I’ll have to get some sample video from the IT guy.

I sent him a build that would dump video to the debug log that I’ll just have to hex-decode and concatenate. It (probably) won’t play for him, but I’ll have sample data to work with, finally.

I got some logs from the IT guy, extracted the video data, decoded it and concatenated it all into a .h264 file. The moment of truth…and it didn’t play. That’s to be expected. Jumping into the debugger, I saw that the parseSPS() function bails because the expected profile_idc=66 was instead 100. I went back to FFmpeg for some help. I looked at a dump for 66 and 100 side-by-side and it looks like the primary difference is the chroma fields.

I let ChatGPT amend its earlier solution with support for chroma fields, which it managed to do correctly. Its coding style is still atrocious though.
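Here is a rough sketch of what that difference looks like at the start of the SPS, based on my reading of the H.264 syntax rather than on the code that shipped. It is in Python, the names are illustrative, and the input is assumed to be the SPS payload after the 1-byte NAL header with emulation prevention bytes already removed.

```python
# profile_idc values whose SPS carries the extra chroma/bit-depth fields
HIGH_PROFILES = {100, 110, 122, 244, 44, 83, 86, 118, 128, 138, 139, 134, 135}

class Bits:
    def __init__(self, data: bytes):
        self.data, self.pos = data, 0
    def u(self, n: int) -> int:  # n-bit unsigned, MSB first
        v = 0
        for _ in range(n):
            v = (v << 1) | ((self.data[self.pos >> 3] >> (7 - (self.pos & 7))) & 1)
            self.pos += 1
        return v
    def ue(self) -> int:  # unsigned Exp-Golomb
        zeros = 0
        while self.u(1) == 0:
            zeros += 1
        return (1 << zeros) - 1 + self.u(zeros)
    def se(self) -> int:  # signed Exp-Golomb
        k = self.ue()
        return (k + 1) // 2 if k & 1 else -(k // 2)

def skip_scaling_list(b: Bits, size: int) -> None:
    last = nxt = 8
    for _ in range(size):
        if nxt != 0:
            nxt = (last + b.se() + 256) % 256
        last = last if nxt == 0 else nxt

def parse_sps_start(rbsp: bytes) -> dict:
    b = Bits(rbsp)
    sps = {"profile_idc": b.u(8), "chroma_format_idc": 1,  # 4:2:0 when absent
           "bit_depth_luma": 8, "bit_depth_chroma": 8}
    b.u(8)  # constraint_set flags and reserved bits
    sps["level_idc"] = b.u(8)
    sps["seq_parameter_set_id"] = b.ue()
    if sps["profile_idc"] in HIGH_PROFILES:
        sps["chroma_format_idc"] = b.ue()
        if sps["chroma_format_idc"] == 3:
            b.u(1)  # separate_colour_plane_flag
        sps["bit_depth_luma"] = b.ue() + 8
        sps["bit_depth_chroma"] = b.ue() + 8
        b.u(1)  # qpprime_y_zero_transform_bypass_flag
        if b.u(1):  # seq_scaling_matrix_present_flag
            for i in range(8 if sps["chroma_format_idc"] != 3 else 12):
                if b.u(1):  # seq_scaling_list_present_flag[i]
                    skip_scaling_list(b, 16 if i < 6 else 64)
    sps["log2_max_frame_num"] = b.ue() + 4
    return sps
```

A parser that only knows the profile 66 layout reads those extra fields as if they were `log2_max_frame_num_minus4` and everything after it, so every later value is wrong.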

I verified that I hadn’t broken anything with Big Buck Bunny and pushed a build to TestFlight for validation by the user.

This morning I saw an email from midnight. Paraphrasing: “I couldn’t wait for the morning. I turned on VPN and IT WORKS. I switched to high res and then added all of the remaining cameras. Everything is great.”

Relishing the success of the moment, I decided to follow up with Rhombus. They’ve been CC’ed on a bunch of emails and know what’s going on. While my solution might “work” in a strict sense, does it auto-reconnect properly? Are there any compatibility issues with different camera configurations? Bit rates? Are there codec switches a user might change that differ from this one school’s setup? “What are the chances you guys would send me a camera?” Not only did I get back a nearly immediate “of course!”, but it also came with a link to their Technology Partner Program, which is a great idea for a tech company like Rhombus.

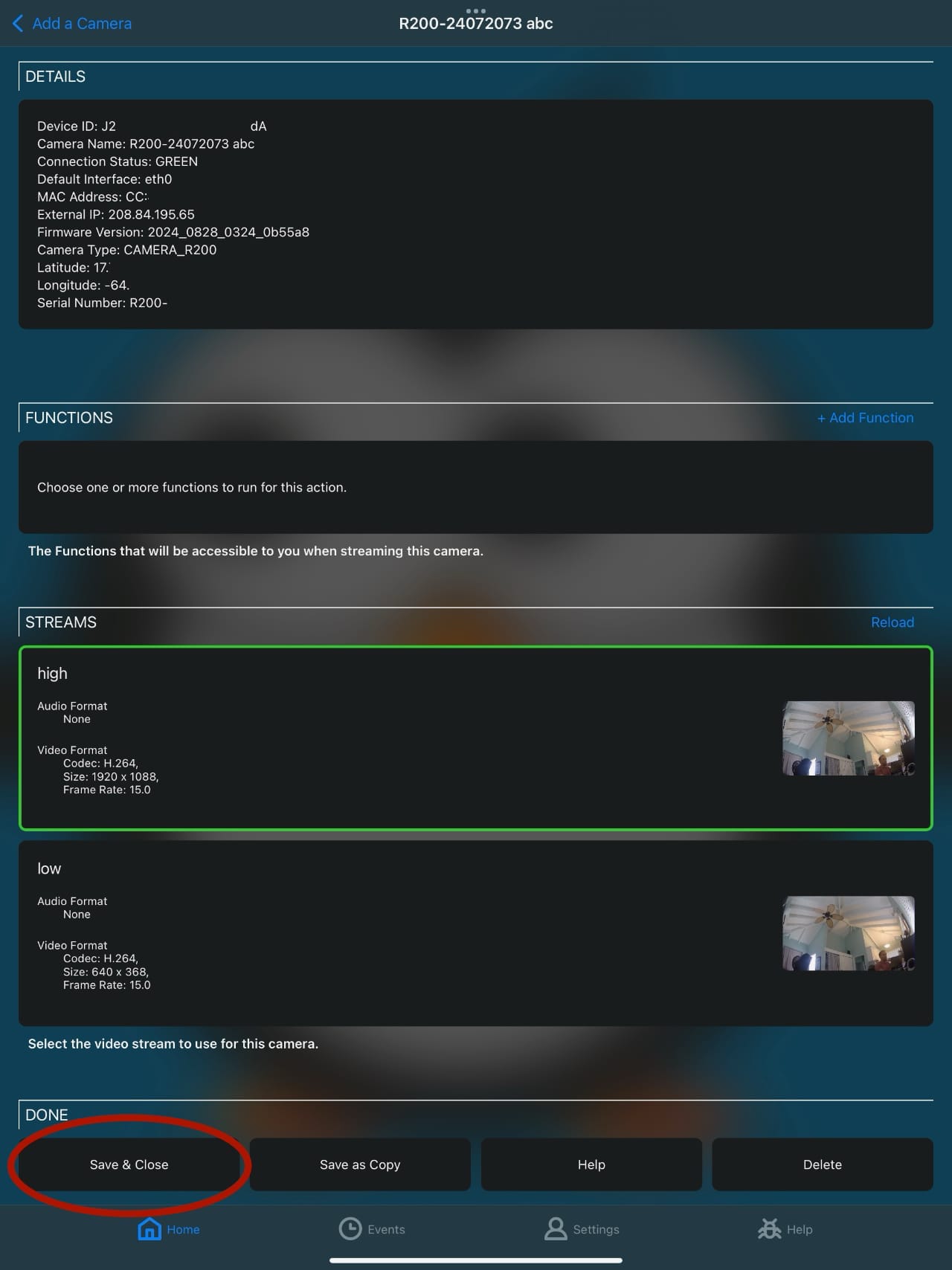

Also, I noticed that Rhombus has a fairly extensive API. Instead of the user copy-pasting a bunch of crazy long URLs into Streamie, I should add a Rhombus Integration that uses an API key and let it auto-discover your cameras.

New camera! And it’s the best kind of camera: a free camera. I suppose in one sense it did cost me several days of work, but I won’t think about it like that.

The Rhombus API is pretty straightforward. There were no surprises. I used one API call to get organization details, another to get a list of cameras, and a third to get the list of RAW streams.

Streamie will now show you a list of your Rhombus cameras, which you can easily add with a couple of taps. And it will discover the SRS URL without you doing any copy-paste work. Just choose the stream quality that you want and you’re done.

Created: 1 year ago

Updated: 1 year ago

Author: Curtis Jones
