I've casually looked at the YouTube guide for MPEG-DASH ingest and thought that maybe it wouldn't be too difficult to get working, but then my eyes would always glaze over when I looked at their atom diagrams of compliant initialization segments and media segments. Their instructions assume a lot of background knowledge, so I never made it very far in past attempts. A recent conversation with a friend coaxed me to give it yet another look and it ultimately proved successful this time, although it took the better part of a week.

The most eye-opening part of the process was that I was unable to find any information online about any products that currently support MPEG-DASH ingest with YouTube. None. They might be out there, but they're certainly not putting much effort into being visible. My hope in finding such a product was to be able to "work backwards" from valid ingest data instead of blindly stumbling forwards with inadequate errors guiding my way.

One other note: I'm doing all of this work in the context of iOS / tvOS / macOS, so my hope is to make as much use of AVFoundation as possible. Or rather, to not have to perpetually include any 3rd party libraries for the sake of supporting YouTube Live Streaming. Actually, I'd like to not have to depend on AVFoundation for that matter.

The three options YouTube supports are RTMP, HLS and DASH.

Let's go ahead and just rule out RTMP right now. It's widely supported, for sure. Is it the only mechanism for Facebook Live ingest? I think it is. But at its core, it is Flash. Like 1990s Flash. I can't in good conscience perpetuate this kind of thing. So that's a no.

In a past life I wrote a custom HLS audio client. My todo list includes writing an HLS audio/video client. Based on my experience with the client side of HLS, and my complete lack of experience with DASH, I believed that DASH was generally superior in terms of implementation effort. Learning MPEG-TS instead of ISO-BMFF makes HLS vs DASH difficult for me to compare because I don't (yet) know anything about MPEG-TS. One day. I will however speculate that it was easier to cajole AVAssetWriter to produce useful output for MPEG-DASH than it would have been to do the same for HLS. “AVAssetWriter supports HLS!” Yeah, yeah. But it wants to keep audio and video in separate files, and YouTube, in its infinite wisdom, wants them squished together. So to some degree it boils down to a question of what’s easier: manually mux-ing TS files or MP4 files. Having succeeded at the latter, that’s got my vote.

If there's one thing that takes the joy out of programming something new, it's navigating Google's Console labyrinth of API keys, project ids, app keys, scopes and so on. Once you've got this sorted though, you can start the process of failing to use their APIs.

The gist of it is that we're first going to OAuth to authenticate. After that, when the user is ready to start a live stream, we'll create a Broadcast, then create a Live Stream, then bind the Stream to the Broadcast, and then use the provided ingest point to start pushing our MPEG-DASH stream.

When you're linking the account, you need to request your scopes, which should be something like:

- https://www.googleapis.com/auth/userinfo.email

- https://www.googleapis.com/auth/userinfo.profile

- https://www.googleapis.com/auth/youtube

- https://www.googleapis.com/auth/youtube.force-ssl

- https://www.googleapis.com/auth/youtube.readonly

- https://www.googleapis.com/auth/youtube.upload

You only really need "youtube" and "youtube.force-ssl", but I want a name/email to display in the UI so the user knows which account he is using.
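As a rough illustration of the scope request, here is a minimal Python sketch that builds a Google OAuth 2.0 authorization URL. The client id, redirect URI and `state` value are placeholders, `authorization_url` is a hypothetical helper, and asking for `access_type=offline` (to get a refresh token) is an assumption about what the app would want rather than something the steps above require.

```python
from urllib.parse import urlencode

AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth"

# The two YouTube scopes that are actually needed, plus name/email for the UI.
SCOPES = [
    "https://www.googleapis.com/auth/userinfo.email",
    "https://www.googleapis.com/auth/userinfo.profile",
    "https://www.googleapis.com/auth/youtube",
    "https://www.googleapis.com/auth/youtube.force-ssl",
]


def authorization_url(client_id, redirect_uri, state):
    """Build the URL the user is sent to in order to link their account."""
    params = {
        "client_id": client_id,        # placeholder: from the Google Console
        "redirect_uri": redirect_uri,  # placeholder: registered for the app
        "response_type": "code",       # authorization-code flow
        "scope": " ".join(SCOPES),     # scopes are space-separated
        "state": state,                # opaque value echoed back to the app
        "access_type": "offline",      # assumption: we want a refresh token
    }
    return AUTH_ENDPOINT + "?" + urlencode(params)
```

The authorization code that comes back on the redirect is then exchanged for tokens, and those tokens are what every later API call carries.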

Jumping ahead for a moment, once you're done with all of this, and all of the MPEG-DASH streaming stuff, and once your app is working and you want the rest of the world to be able to try it out, you have to start the Google Verification nightmare. This is when you learn that the button to start the OAuth process, which no doubt blends with the style of your UI, isn't branded correctly (i.e., it needs to be ugly), and then the button doesn't have the correct margins, and then you need to create a 4th video tutorial that shows your project id more clearly, and... Just brace yourself.

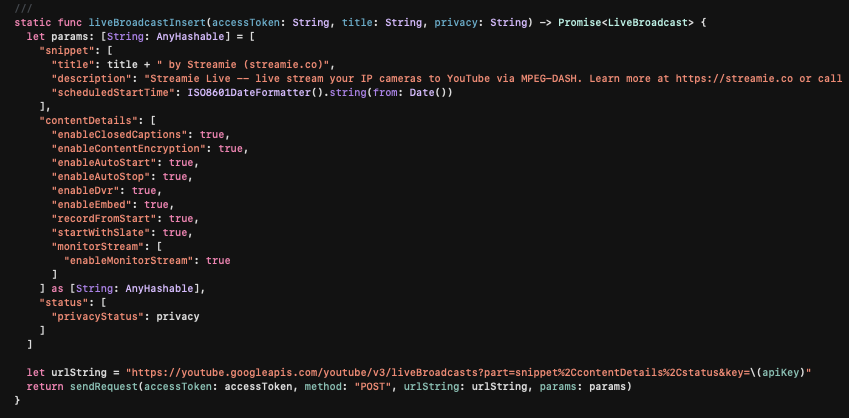

Create your shiny new broadcast.

The "enableAutoStart" and "enableAutoStop" options are particularly handy. Instead of having to monitor the stream to note when it becomes active, YouTube will automatically make it go live when the stream is healthy. And it'll shut it down when you're done.

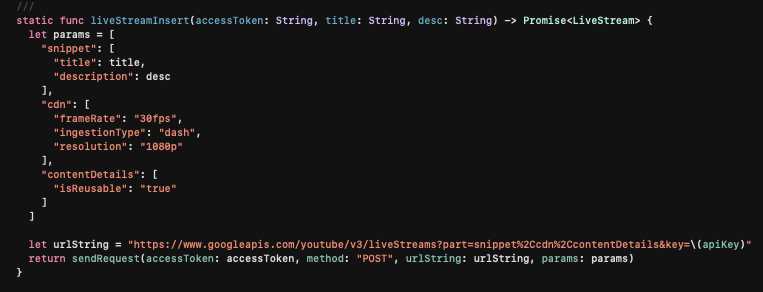

Create your shiny new live stream.

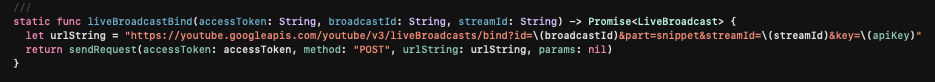

It's like they took a cue from socket programming or something, and now you need to "bind" your live stream to your broadcast.

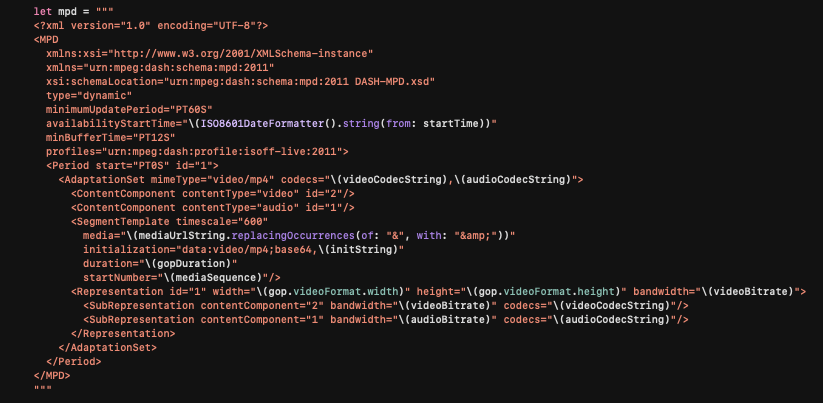

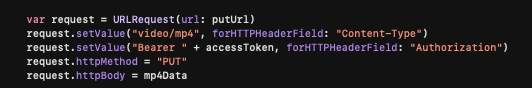
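For reference, the create / create / bind sequence can be sketched in Python as plain request descriptions, without doing any networking. This is not the code from the screenshots. The endpoints and field names follow the YouTube Live Streaming API, but the `unlisted` privacy status, the `variable` resolution and frame rate, and the helper names are assumptions made for illustration. Each request would also need an `Authorization: Bearer <token>` header.

```python
API = "https://www.googleapis.com/youtube/v3"


def create_broadcast(title, scheduled_start_iso):
    """Describe the liveBroadcasts.insert request."""
    return {
        "method": "POST",
        "url": f"{API}/liveBroadcasts",
        "params": {"part": "snippet,status,contentDetails"},
        "body": {
            "snippet": {"title": title, "scheduledStartTime": scheduled_start_iso},
            "status": {"privacyStatus": "unlisted"},  # assumption
            # Let YouTube go live and shut down on its own.
            "contentDetails": {"enableAutoStart": True, "enableAutoStop": True},
        },
    }


def create_stream(title):
    """Describe the liveStreams.insert request for a DASH ingest point."""
    return {
        "method": "POST",
        "url": f"{API}/liveStreams",
        "params": {"part": "snippet,cdn"},
        "body": {
            "snippet": {"title": title},
            "cdn": {
                "ingestionType": "dash",
                "resolution": "variable",  # assumption
                "frameRate": "variable",   # assumption
            },
        },
    }


def bind(broadcast_id, stream_id):
    """Describe the liveBroadcasts.bind request tying the two together."""
    return {
        "method": "POST",
        "url": f"{API}/liveBroadcasts/bind",
        "params": {"id": broadcast_id, "streamId": stream_id,
                   "part": "id,contentDetails"},
        "body": None,
    }


def ingest_address(stream_response):
    """Pull the ingest point out of a liveStreams.insert response."""
    return stream_response["cdn"]["ingestionInfo"]["ingestionAddress"]
```

Keeping the requests as data like this makes the order of operations easy to see: the stream response supplies the ingest address, and the two returned ids feed the bind call.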

To begin your stream, and then at least once every 60 seconds after that, you must send an initialization segment to the ingest point. As a template, that might look something like this:

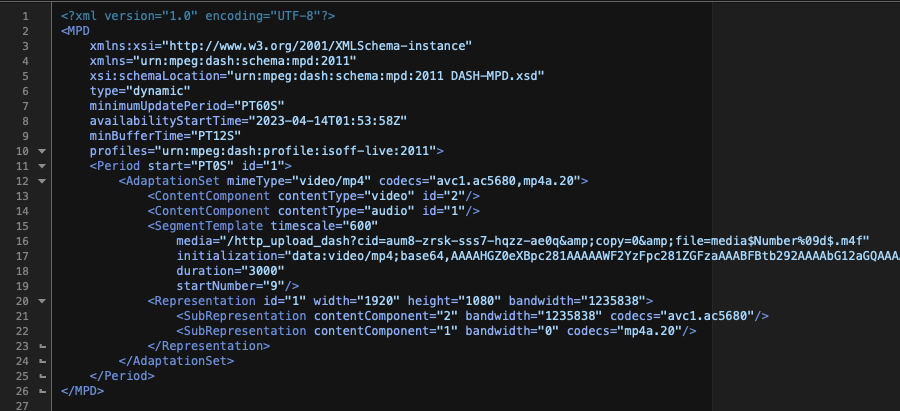

and as a literal example, something like this:

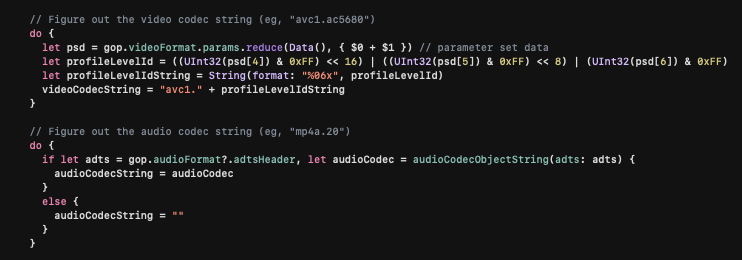

The AdaptationSet wants a specific string to identify some parameters for the video codec and audio codec. For the video track, we concatenate the parameter sets and extract the profile level id.

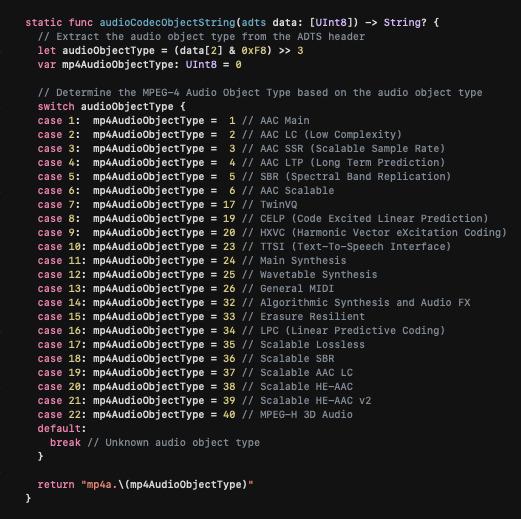
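A minimal Python sketch of the video half, assuming the SPS is a bare NAL unit (no start code or length prefix). The three bytes after the NAL header are profile_idc, the constraint flags and level_idc, and the codec string is those three bytes in hex. `avc_codec_string` is a hypothetical helper name.

```python
def avc_codec_string(sps: bytes) -> str:
    """Derive an 'avc1.PPCCLL' codec string from an H.264 SPS NAL unit."""
    # The low 5 bits of the first byte are the NAL unit type; 7 means SPS.
    if len(sps) < 4 or (sps[0] & 0x1F) != 7:
        raise ValueError("expected an SPS NAL unit (type 7)")
    profile_idc, constraint_flags, level_idc = sps[1], sps[2], sps[3]
    return f"avc1.{profile_idc:02X}{constraint_flags:02X}{level_idc:02X}"
```

For example, an SPS beginning `67 64 00 1F` (High profile, level 3.1) yields `avc1.64001F`.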

For the audio track, we take the ADTS header (remember: this is AAC-only) and decode the object type (credit where credit is due: I had ChatGPT write this tedious code for me).

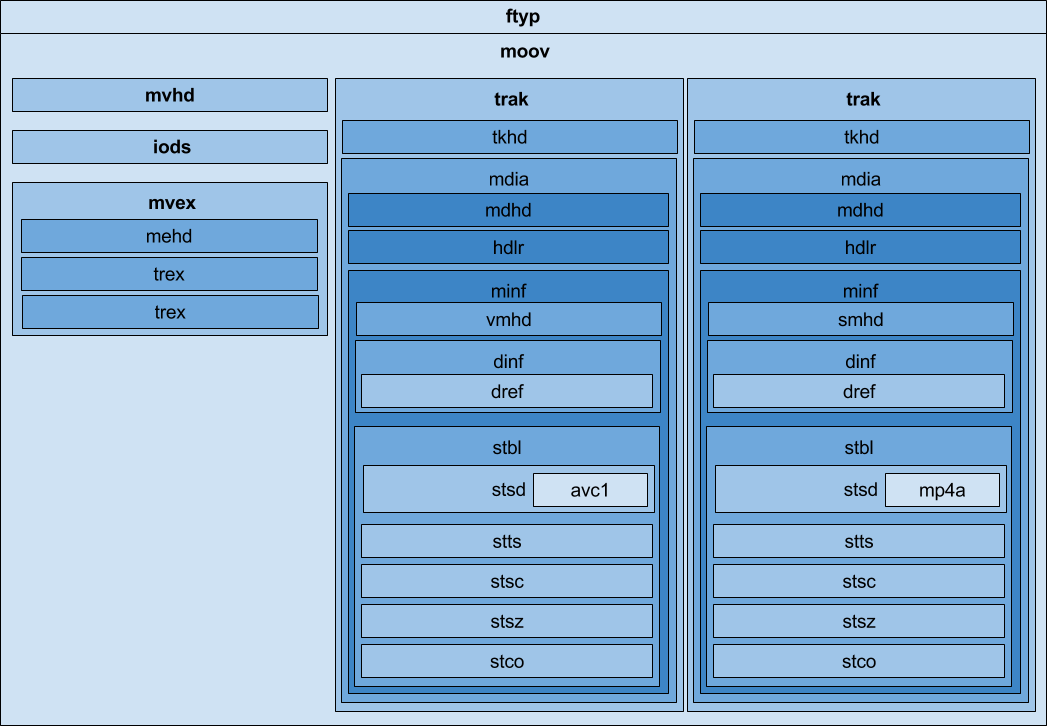
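The audio half is similarly small. Here is a Python sketch, assuming a standard 7-byte ADTS header: the 2-bit profile field holds the MPEG-4 audio object type minus one, and the codec string is `mp4a.40.` followed by that object type. The helper names are hypothetical.

```python
# Sampling-frequency table indexed by the 4-bit ADTS field.
SAMPLE_RATES = [96000, 88200, 64000, 48000, 44100, 32000,
                24000, 22050, 16000, 12000, 11025, 8000, 7350]


def parse_adts(header: bytes) -> dict:
    """Decode the fields of an ADTS header that matter for the manifest."""
    # 12-bit syncword: all ones.
    if len(header) < 7 or header[0] != 0xFF or (header[1] & 0xF0) != 0xF0:
        raise ValueError("not an ADTS header")
    object_type = ((header[2] >> 6) & 0x03) + 1   # profile field + 1
    rate_index = (header[2] >> 2) & 0x0F
    if rate_index >= len(SAMPLE_RATES):
        raise ValueError("reserved sampling frequency index")
    # Channel configuration straddles bytes 2 and 3.
    channels = ((header[2] & 0x01) << 2) | ((header[3] >> 6) & 0x03)
    return {
        "object_type": object_type,
        "sample_rate": SAMPLE_RATES[rate_index],
        "channel_config": channels,
    }


def aac_codec_string(header: bytes) -> str:
    """Build the 'mp4a.40.N' codec string from an ADTS header."""
    return f"mp4a.40.{parse_adts(header)['object_type']}"
```

A header starting `FF F1 50 80` decodes as AAC-LC (object type 2), 44.1 kHz, stereo, which gives `mp4a.40.2`.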

You may also have noticed that the ContentComponent values of the AdaptationSet each have a numerical id attribute. That id must correspond to the id of the trak/traf in the fragmented mpeg4 segments.

Finally, we need to deal with the initialization data itself. The initialization data is basically a base64-encoded mp4 file with a video track and an audio track, each of which has zero frames/samples. Sounds easy enough, right? Here's the rub: AVAssetWriter supports generating fragmented mpeg4 files (for the sake of HLS), but it doesn't support mux-ed output (which YouTube requires); it'll work with video, or it'll work with audio, but not both (at the same time). Furthermore, YouTube doesn’t support video-only streams, so you can’t test your video support before attempting to tackle audio + video. You have to run two instances of AVAssetWriter, get the initialization segment for each media type, and then combine those together. Combine them? Yeah....

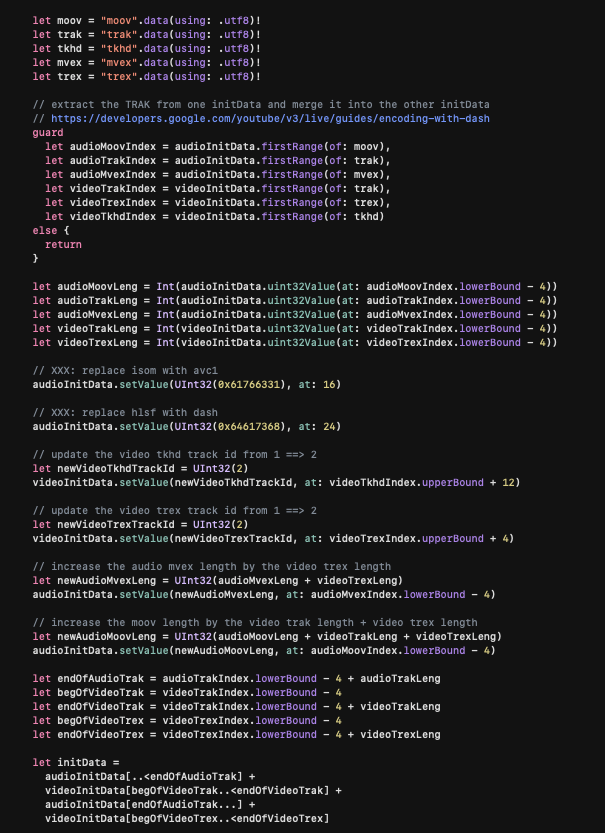

So, if you look at that atom diagram up top, you'll see that the initialization segment is an ftyp and moov. The moov contains an mvhd, iods, mvex and two trak-s. There's no subsequent mdat, because (as I mentioned earlier), there are no samples for either of the tracks. ISO-BMFF is brilliant in its simplicity. Combining the two initialization segments that AVAssetWriter outputs is just a matter of finding where some of these atoms are located and grabbing the right chunks. This code is obviously a bit brittle.

Base64 encode this initialization data. Toss it in the initialization XML. Ship it off to YouTube. And do this again every 45 seconds or so. Jumping ahead again for a moment, the process I settled on was to check if it was yet time to re-send the initialization segment immediately before we had a complete Group of Pictures to send. More on that next.

Each time you send the initialization segment to the ingest point, the only thing that needs to be updated is the startNumber, which should correspond to the next media segment you’ll send.

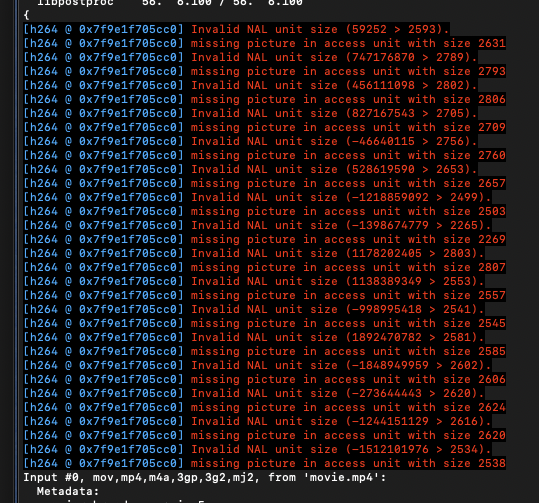
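The re-send timing can be sketched in a few lines of Python. This assumes the check happens once per completed Group of Pictures and uses a 45 second interval. `render` stands in for whatever fills the base64 data and startNumber into the XML template; it is a hypothetical callable, as are the other names here.

```python
import base64


class InitScheduler:
    """Tracks when the initialization segment was last sent."""

    def __init__(self, interval=45.0):
        self.interval = interval
        self.last_sent = None

    def due(self, now):
        # Always due before the first send, then once per interval.
        return self.last_sent is None or now - self.last_sent >= self.interval

    def mark_sent(self, now):
        self.last_sent = now


def uploads_for_gop(scheduler, now, segment_number, segment_bytes, init_mp4, render):
    """Return the (name, payload) uploads to make for one completed GOP."""
    uploads = []
    if scheduler.due(now):
        encoded = base64.b64encode(init_mp4).decode("ascii")
        # startNumber is the number of the media segment about to be sent.
        uploads.append(("init", render(encoded, segment_number)))
        scheduler.mark_sent(now)
    uploads.append((f"media-{segment_number}", segment_bytes))
    return uploads
```

Because the check runs immediately before a media segment goes out, the startNumber in a re-sent initialization segment always matches the segment that follows it.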

The idea here is that you should be able to create a complete, playable movie by taking this initialization segment and any number of subsequent media segments, and simply concatenating them together. When you test that later on and it doesn't play, use ffprobe to tell you what's wrong with it. It'll save you many hours of agony.

Once again, AVFoundation's HLS support works against us much like it did with the initialization segment. It'll create our fragmented mpeg4 files, but only with one media type at a time. Additionally, YouTube wants each media segment to be a single, complete Group of Pictures. You start with a key frame, and you include all of the following frames up to (but not including) the next key frame. In that same media segment, you should include all of the related audio samples.
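The grouping rule can be sketched in Python. This assumes samples arrive in presentation order with audio and video interleaved, and that anything before the first key frame is dropped. The `Sample` type and `gops` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    kind: str             # "video" or "audio"
    pts: float            # presentation time in seconds
    is_key: bool = False  # only meaningful for video


def gops(samples):
    """Yield (video_samples, audio_samples) for each complete GOP."""
    video, audio = [], []
    started = False
    for s in samples:
        starts_gop = s.kind == "video" and s.is_key
        if starts_gop:
            if started:
                # The next key frame closes the current GOP.
                yield video, audio
            video, audio, started = [], [], True
        if not started:
            continue  # nothing can be sent before the first key frame
        (video if s.kind == "video" else audio).append(s)
    # The GOP in progress is not yielded: on a live stream it only becomes
    # complete when the next key frame shows up.
```

Each yielded pair is what goes into one media segment: the key frame, the frames that depend on it, and the audio that plays alongside them.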

Now is a good time to mention that YouTube's MPEG-DASH ingest only supports H.264 (AVC1) video and AAC audio. If your IP camera input source (or whatever) is a different video codec or audio codec, you'll need to transcode it. And while transcoding one or both of the media types, you'll want to keep those samples time-coordinated, obviously.

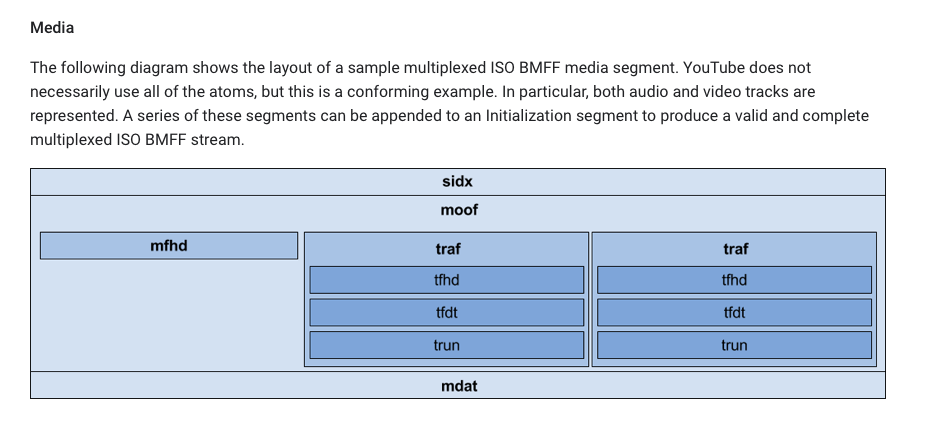

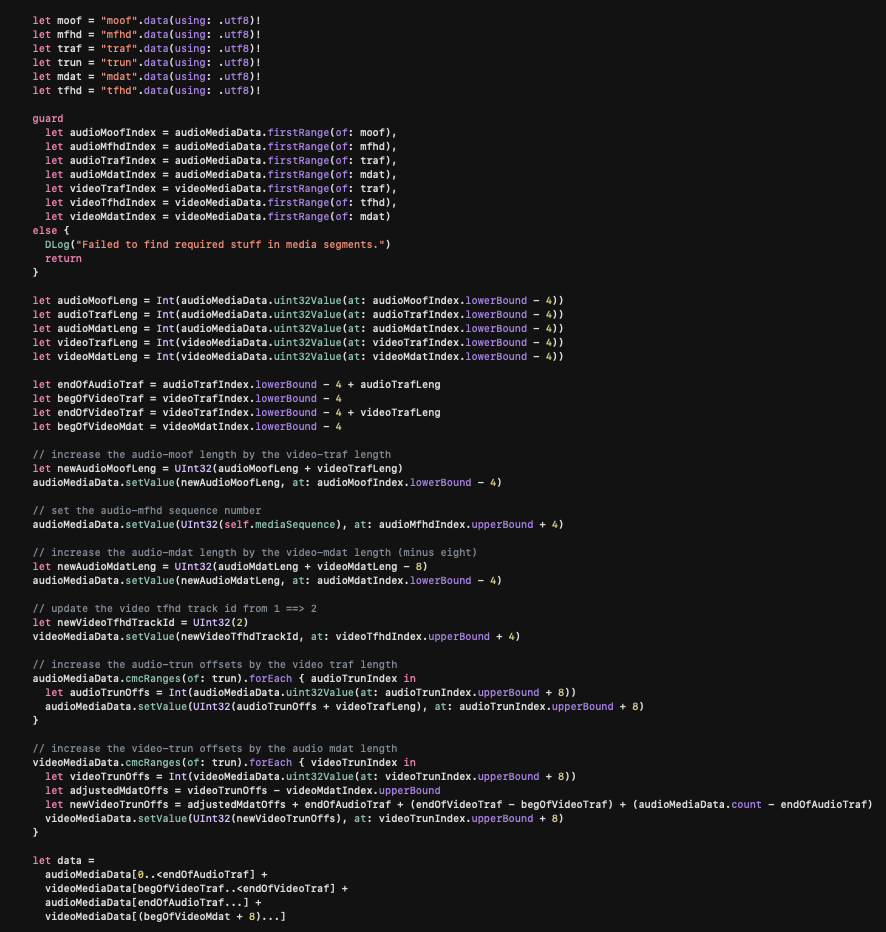

Let's say that you do all of that. You end up with something that looks like this, except with only one traf per file, and you need to merge them into a single file. This is less fun than you might imagine.

Very much like merging the initialization segment, we just have a few atoms to work with: sidx (which we don't actually need, and which AVAssetWriter does not produce), moof and mdat. The mdat atom needs to have the concatenation of our audio data and video data. The moof has a bunch of offsets that need to be adjusted accordingly, and we need to add in the 2nd traf atom. Again, this is brittle, but it gets us up-and-running.
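Here is a Python sketch of that merge, under some loudly stated assumptions: each input segment has one moof with one traf and one trun, the trun carries a data_offset field, and offsets are relative to the start of the moof (the tfhd default-base-is-moof behaviour). Whether AVAssetWriter's output always meets these conditions is not something this sketch verifies. The helper names are hypothetical.

```python
import struct


def iter_boxes(data):
    """Yield (type, payload) for each box at the top level of `data`."""
    pos = 0
    while pos + 8 <= len(data):
        size, kind = struct.unpack_from(">I4s", data, pos)
        if size < 8 or pos + size > len(data):
            raise ValueError(f"unsupported box size at offset {pos}")
        yield kind.decode("latin-1"), data[pos + 8:pos + size]
        pos += size


def make_box(kind, payload):
    return struct.pack(">I4s", 8 + len(payload), kind.encode("latin-1")) + payload


def find(data, kind):
    for k, payload in iter_boxes(data):
        if k == kind:
            return payload
    raise KeyError(kind)


def traf_with_offset(traf_payload, data_offset):
    """Rebuild a traf box with its single trun pointing at data_offset."""
    children, truns = [], 0
    for kind, payload in iter_boxes(traf_payload):
        if kind == "trun":
            truns += 1
            flags = int.from_bytes(payload[1:4], "big")
            if not flags & 0x000001:
                raise ValueError("trun has no data_offset field")
            # Layout: version(1) flags(3) sample_count(4) data_offset(4) ...
            payload = payload[:8] + struct.pack(">i", data_offset) + payload[12:]
        children.append(make_box(kind, payload))
    if truns != 1:
        raise ValueError("sketch assumes exactly one trun per traf")
    return make_box("traf", b"".join(children))


def merge_media(video_seg, audio_seg):
    """Combine two single-track media segments into one moof + mdat."""
    v_moof, a_moof = find(video_seg, "moof"), find(audio_seg, "moof")
    v_data, a_data = find(video_seg, "mdat"), find(audio_seg, "mdat")
    mfhd = make_box("mfhd", find(v_moof, "mfhd"))
    v_traf, a_traf = find(v_moof, "traf"), find(a_moof, "traf")

    # Patching data_offset does not change any sizes, so the merged moof
    # size can be computed up front.
    moof_size = 8 + len(mfhd) + (8 + len(v_traf)) + (8 + len(a_traf))
    v_offset = moof_size + 8            # skip the mdat header
    a_offset = v_offset + len(v_data)   # audio follows the video bytes

    moof = make_box("moof", mfhd
                    + traf_with_offset(v_traf, v_offset)
                    + traf_with_offset(a_traf, a_offset))
    return moof + make_box("mdat", v_data + a_data)
```

The offset arithmetic is the part that tends to go wrong: video data starts right after the mdat header, and audio data starts where the video bytes end.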

Now just ship it off to YouTube and keep it coming.

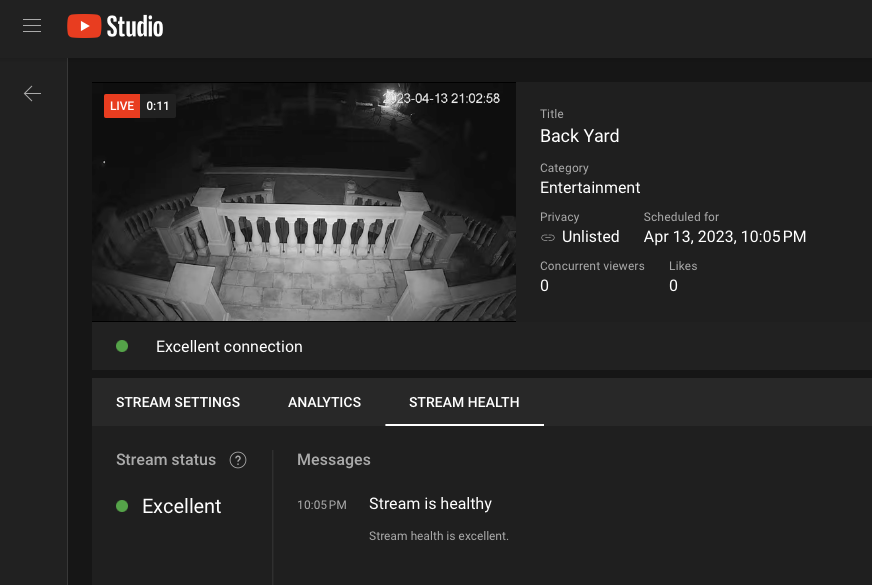

When it all finally comes together, you'll see something like this:

When you're stuck and YouTube isn't producing useful error messages, be sure to verify your MP4 output with something like HexEdit, mp4dump and ffprobe. The latter is essential.

- ffprobe -i movie.mp4 -analyzeduration 2147483647 -probesize 2147483647 -flags +global_header -show_streams -show_format -print_format json

I don't have a full grasp of what AVAssetWriter is actually doing when it outputs the fragmented media files. Is it just concatenating the frames and noting each frame offset in the traf atom? Does it add timing information to each sample? Whatever the case, it feels like an incremental step to remove the AVFoundation dependency entirely, and with it a non-trivial amount of rather brittle code that goes into merging the audio and video media segments. I'll tackle that later though.

The Streamie v3.13.2 release notes, which include the first beta version of YouTube Live Streaming support, can be found here.

Created: 2 years ago

Updated: 1 year ago

Author: Curtis Jones
